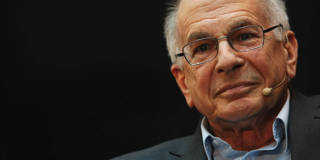

Daniel Kahneman, who passed away in March at the age of 90, received the Nobel Prize in Economics despite never having taken an economics course. His scholarship upended the discipline's fundamental assumptions and laid the groundwork for the emergence of behavioral economics.

CAMBRIDGE – The recent passing of psychologist and Nobel laureate Daniel Kahneman is an apt moment to reflect on his invaluable contribution to the field of behavioral economics. While Alexander Pope’s famous assertion that “to err is human” dates back to 1711, it was the pioneering work of Kahneman and his late co-author and friend Amos Tversky in the 1970s and early 1980s that finally persuaded economists to recognize that people often make mistakes.

CAMBRIDGE – The recent passing of psychologist and Nobel laureate Daniel Kahneman is an apt moment to reflect on his invaluable contribution to the field of behavioral economics. While Alexander Pope’s famous assertion that “to err is human” dates back to 1711, it was the pioneering work of Kahneman and his late co-author and friend Amos Tversky in the 1970s and early 1980s that finally persuaded economists to recognize that people often make mistakes.